Metaspectral Deep Learning Model Achieves State-of-the-Art Performances on Toulouse Hyperspectral Dataset Benchmark

on Thu Dec 18 2025

Guillaume

The Toulouse Hyperspectral Dataset (Thoreau et al., 2024) provides a benchmark for validating pixel-wise classification techniques in challenging, uneven classes and limited-label environments. The dataset, acquired over Toulouse (France), using the airborne AisaFENIX sensor, features a very high spatial resolution (1 m Ground Sampling Distance) and 310 contiguous spectral channels spanning the 400 nm to 2500 nm range. This rich spectral information, combined with a sparse but highly detailed ground truth of 32 land cover classes, makes it ideal for testing model performances and generalization. The original paper’s baseline employed a two-stage approach involving a self-supervised Masked Autoencoder (MAE), to learn spectral representations, followed by a Random Forest (RF) classifier (MAE+RF). It achieved an overall accuracy of 0.85 and an F1 score of 0.77.

Metaspectral is frequently asked about the performance of its Deep Learning (DL) CNN models that were specifically developed for pixel-wise classification, target detection, regression and unmixing tasks on hyperspectral data. Here we sought to demonstrate the efficiency and predictive power of our CNN classifier. We benchmarked our single-stage supervised model against the established MAE+RF pipeline. Our results validate that Metaspectral’s CNN not only maintains competitive performance even with the smallest labeled data subset but also surpasses the original F1 score baseline by a significant margin when trained on all available labeled data. This achievement positions Metaspectral’s model as state-of-the-art for this benchmark. The data and results presented here can be accessed directly on the Clarity sandbox.

Experimental Setup

To ensure robust evaluation, the dataset utilizes 8 spatially disjoint splits. Each split is divided into:

- Unlabeled Pool: ~ 2.6 million truly unlabeled pixels. This vast pool was used by the MAE for pre-training. It was not used at all by our CNN.

- Labeled Training Set: The small subset used for supervised training of the final classifier in the paper’s baseline (~ 13% of total labeled pixels).

- Labeled Pool: An additional, spatially disjoint subset of labeled pixels designated for self-supervised training, active learning, or direct supervised training (accounting for ~ 29% of total labeled pixels).

- Test Set: Used for final evaluation (fixed in all experiments).

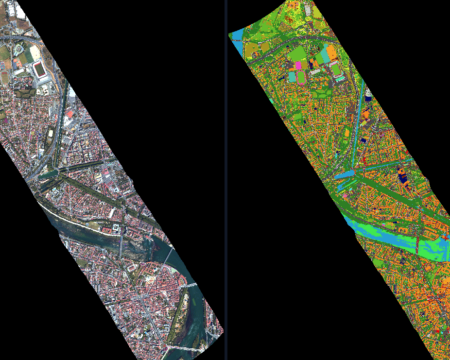

These splits can be reproduced with the code provided on the TlseHypDataSet GitHub repository. Each split was uploaded on Metaspectral’s Clarity platform to create a corresponding Dataset. Clarity gives an overview of the Dataset through the first and second PCA components (Figure 1) for the train, validation and test subsets. The dataset for split #5 can be visualized on the Clarity sandbox here.

Original Baseline (RF and self-supervised MAE+RF)

Thoreau et al. (2024) first present a RF model trained using the Labeled Training Set to provide a fully supervised baseline. Subsequently, an MAE was pre-trained using the self-supervised masked reconstruction pretext task, a method where the model is forced to reconstruct the full input spectrum given only a subset of spectral channels. This process compels the encoder to learn robust, low-dimensional representations of the data’s intrinsic chemical and material properties from the unmasked regions of the input spectra, leveraging the total available data from the Unlabeled Pool, Labeled Pool, and Labeled Training Set for pre-training. It is worth pointing out that the MAE’s performance relies on the diversity of the spectral data it sees, and the Labeled Pool provides crucial spectral diversity across all classes. Therefore, the labels, while not used directly in the MAE’s reconstruction loss function, are used indirectly to ensure the necessary spectral diversity is present in the data. Finally, the MAE + RF classifier is trained on the MAE embeddings.

Metaspectral’s Supervised Approach (CNN)

Metaspectral’s Deep Learning CNN was designed for spectral classification and trained end-to-end (feature extraction and classification jointly optimized) under two distinct scenarios:

- Scenario A: The CNN was trained only on the ~ 13% Labeled Training Set, matching the exact supervised data input used by the paper’s RF model baseline.

- Scenario B: The CNN was trained on the combined set of the Labeled Training Set and the Labeled Pool, utilizing approximately 42% of the total labeled pixels. This includes some of the information provided to the MAE but does not utilize the spectral data from the Unlabeled Pool.

Results and Discussion

All benchmark results are the ones of the test set, presented as averages across all splits (in the paper as well as in the results presented below). As shown in table 1, Metaspectral’s model led to an improvement of the F1 score over both the RF and MAE+RF baselines, demonstrating and validating the efficiency and predictive power of our optimized single-stage deep learning architecture.

| Model | Data Pools | OA | F1 score |

| RF (paper) | Labeled Training Set | 0.75 | 0.65 |

| MAE+RF (paper) | Labeled Training Set with MAE pre-trained on all pools | 0.85 | 0.77 |

| Metaspectral’s CNN (Scenario A) | Labeled Training Set | 0.79 | 0.78 |

Metaspectral’s CNN (Scenario B) | Labeled Training Set + Labeled Pool | 0.85 | 0.84 |

When comparing the models, it can be noticed that Metaspectral’s Scenario A CNN achieved an F1 score of 0.78 using only the ~13% Labeled Training Set. This model immediately surpassed the F1 score performance not only of the RF but also of the two-stage MAE+RF pipeline (F1=0.77).

Another notable observation is that both the MAE+RF baseline and Metaspectral’s CNN in Scenario B achieved the same Overall Accuracy (OA) of 0.85. However, the Metaspectral CNN yielded a significantly higher F1 score (0.84 vs. 0.77). This distinction is important for the Toulouse dataset, which exhibits a long-tailed class distribution. Since OA is dominated by a model’s performance on the majority classes, the superior F1 score (the harmonic mean of precision and recall) confirms that the CNN architecture provides better predictive reliability across all 32 land cover classes, and particularly the minority classes.

Finally, the increase of the F1 score in Metaspectral’s Scenario B of 7% above the RF+MAE baseline model suggests that the most valuable information within the available training data is the high-quality ground-truth label rather than the unlabelled data pool, which the CNN architecture exploits maximally through end-to-end learning. The relative lower F1 score of the MAE+RF pipeline compared to both scenarios could potentially be attributed to two factors. First, while the MAE effectively learned generalized spectral features from the vast UP (contributing to the high OA), these features may not have been optimally disentangled or precise enough to robustly separate the minority, long-tailed classes, which are critical for the F1 score. A structural factor potentially limiting the MAE’s efficacy is the high spectral collinearity inherent in hyperspectral data. Second, the use of an RF classifier in the second stage of the baseline pipeline decouples the feature extraction (MAE) from the final classification task.

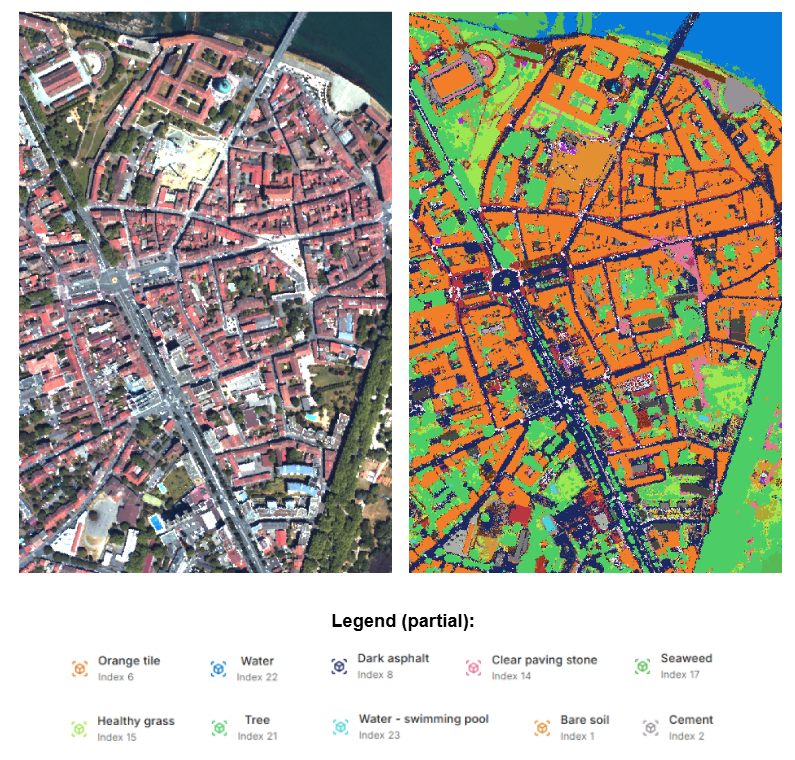

The dataset split #5 is the one that yielded the best model with OA and F1 score both of 0.89. Model results can be visualized on the Clarity sandbox here. An example of classification inference is given in Figure 2 below and is also available on the Clarity sandbox for two entire AisaFENIX images.

Conclusions

In conclusion, Metaspectral’s Deep Learning CNN sets a new performance standard for pixel-wise classification on the Toulouse Hyperspectral Dataset. Our average F1 score of 0.84 is attributable to the architectural strength and efficacy of maximizing high-quality labeled data in a fully supervised training regime.